KPIs, OKRs, SLIs, SLOs: just a few of the metrics an organization can use. In recent years, increasing emphasis has been placed on measuring and monitoring results. It is an understandable movement, and one I wholeheartedly support. I often help organizations become more aware of the impact they create by measuring the results of their actions. Does that mean metrics are simply great? Well, not necessarily. Metrics can have dysfunctional effects. Take, for example, the Cobra Effect.

When the British ruled India, the colonial government was concerned about the number of venomous cobras. No one likes to be bitten by a venomous snake, right? So it put a bounty on every dead cobra. The intended effect was that people would capture and kill the snakes, reducing the cobra population. However, something else happened. People started to breed cobras intentionally so they could kill them and collect the bounty. When the government learned about this, it stopped the reward program. Now that the cobras no longer had any value, the breeders released them. Oops: the initial problem had become an even larger one. This story, anecdotal as it may be, is known as the Cobra Effect.

Metrics, and the behavior they enforce, have dysfunctional effects. When designing or evaluating measurements, it is important to be aware of these effects so that you can get the most value out of measurements such as KPIs or SLIs. In this article I will dive into the negative consequences of performance measurement.

From complex to overly simple

Looking at the negative consequences of metrics, we can observe passive and active effects. Passive effects happen simply because performance measurement has been instituted; they occur in any case. Power (2004) describes three fundamental concerns in his work, which I would attribute to the passive effects of using metrics.

According to Power, reductionism is inherently part of measuring. To be able to count things, we have to simplify the objects we are counting. For example, individual horses are not identical, but to count them we reduce each one to the classification "horse". We emphasize the most common similarities while ignoring the less common ones. The consequence of this reduction is that we simplify complex problems. That is great for the purpose of control, but it is not the best reflection of reality. The climate problem, for instance, is reduced to a measure of CO2 emissions, and that creates a new set of problems of its own.

Of course, IT organizations are not immune to this phenomenon. In IT we tend to prioritize simple solutions for complex problems. The research captured in Accelerate discusses four DevOps metrics. One of them is Deployment Frequency, a measurement that, strictly speaking, only counts the number of deployments. Using it implicitly attributes value delivery, or other benefits, to each deployment. These implicit expectations might hold, but it is quite possible that they do not. Deploying more often does not equal generating more value from the solution. Measuring and steering solely on this measurement yields limited results. This can be mitigated by using Pairing Metrics. In short, this means adding a second metric that measures the negative result or gaming effect of the initial metric. For example, if I measured the number of sales quotes provided, I would also measure the success rate of those quotes, to prevent people from sending out many quotes that have no chance of being closed.
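To make the sales quote example concrete, here is a minimal sketch in Python of how a primary metric and its paired metric could be judged together. The `QuotePeriod` structure, the `paired_review` function, and the five-percentage-point tolerance are all hypothetical choices for illustration, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class QuotePeriod:
    """Sales-quote figures for one reporting period (hypothetical structure)."""
    quotes_sent: int
    quotes_won: int

    @property
    def win_rate(self) -> float:
        # Guard against division by zero when no quotes were sent.
        return self.quotes_won / self.quotes_sent if self.quotes_sent else 0.0


def paired_review(previous: QuotePeriod, current: QuotePeriod,
                  max_win_rate_drop: float = 0.05) -> str:
    """Judge the primary metric (quotes sent) together with its pair (win rate).

    max_win_rate_drop is an assumed tolerance, not an established norm.
    """
    volume_up = current.quotes_sent > previous.quotes_sent
    win_rate_drop = previous.win_rate - current.win_rate
    if volume_up and win_rate_drop > max_win_rate_drop:
        return "warning: more quotes sent, but the win rate fell"
    if volume_up:
        return "ok: more quotes sent without hurting the win rate"
    return "no increase in quotes sent"


# Made-up figures: volume almost doubles while the win rate halves.
last_quarter = QuotePeriod(quotes_sent=100, quotes_won=30)
this_quarter = QuotePeriod(quotes_sent=180, quotes_won=27)
print(paired_review(last_quarter, this_quarter))
```

Looking only at the number of quotes, this quarter would seem like a success. The paired metric shows that the extra quotes did not lead to extra deals.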

Evaluating a measurement full of assumptions

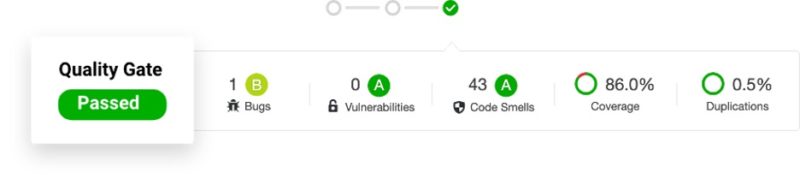

Nowadays, we evaluate not only direct observations but also metrics based on ratios or indexes of those observations. Power calls these second-order measurements and sees them as another fundamental concern. An example of a second-order measurement can be found in the analysis report of SonarQube.

Source: https://www.sonarqube.org/features/quality-gate/

The A-rating on code smells is a second-order measurement. It is derived from several observations (first-order metrics), codified by rules, and calculated from the outstanding remediation cost. The rating given by SonarQube in this case is an A. That probably feels reassuring if it is your code being analyzed. However, the ratios behind the calculation might not be suited to your case. If you rely on the maintainability rating alone, they might hide big problems. The Big Short showed the risk of unquestioning trust in credit ratings while the underlying mortgages were rubbish, which led us into the financial crisis. It is important to understand what is behind a second-order measurement, how it is designed, and whether the ratios and calculations used suit the context of the organization.
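The sketch below illustrates how much a second-order measurement depends on the assumptions baked into it. It is loosely modeled on the idea of a rating derived from a debt ratio (remediation cost divided by an estimated development cost). The function names, the threshold grid, and the minutes-per-line values are assumptions for illustration and should not be read as the exact configuration of SonarQube or any other tool.

```python
def debt_ratio(remediation_minutes: float, lines_of_code: int,
               minutes_per_line: float = 30.0) -> float:
    """Second-order value: remediation cost relative to estimated development cost.

    minutes_per_line is an assumed cost of writing one line of code.
    """
    if lines_of_code <= 0 or minutes_per_line <= 0:
        raise ValueError("lines_of_code and minutes_per_line must be positive")
    development_minutes = lines_of_code * minutes_per_line
    return remediation_minutes / development_minutes


def rating(ratio: float) -> str:
    """Map a debt ratio to a letter using an assumed threshold grid."""
    thresholds = [(0.05, "A"), (0.10, "B"), (0.20, "C"), (0.50, "D")]
    for upper_bound, letter in thresholds:
        if ratio <= upper_bound:
            return letter
    return "E"


# Identical first-order observations (made-up numbers) ...
remediation_minutes = 24_000
lines_of_code = 20_000

# ... rated under two different assumptions about the cost of a line of code.
rating_default = rating(debt_ratio(remediation_minutes, lines_of_code))
rating_cheap_lines = rating(
    debt_ratio(remediation_minutes, lines_of_code, minutes_per_line=5.0)
)
print(rating_default, rating_cheap_lines)
```

With these numbers the same code base is rated A under the first assumption and D under the second. Nothing about the code changed, only the ratio behind the rating.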

Gaming the measurement

Other concerns can be found in the active manipulation of measurements or output. Van Dooren (2006) describes in his research several ways to manipulate a measurement. Of course, this can simply be done by manipulating the data, meaning you enter something other than what you observe. Other, less obvious ways involve using different definitions or concepts for the measurement. The number of observation points can play a huge role as well. In the Netherlands we have intense debates regarding nitrogen emissions. In the beginning the number of measuring stations was low, while their readings shaped policy for the entire country. One could argue that the consequences attached to those measurements were out of proportion to the small number of observation points. Whether this was intentional or not is beside the point. When designing metrics, the number of observations should be in proportion to the consequences of that metric. An ecommerce company that uses only the revenue of Cyber Monday to forecast next year's revenue will end up with a skewed forecast.
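The Cyber Monday example can be sketched with a few lines of Python. The revenue figures are made up, and `forecast_annual` is a hypothetical and deliberately naive helper that extrapolates the average of whatever observations it is given to a full year.

```python
from statistics import mean

# Made-up data: 364 ordinary days and one peak day (think Cyber Monday).
daily_revenue = [10_000.0] * 364 + [60_000.0]


def forecast_annual(observations: list[float], days_in_year: int = 365) -> float:
    """Naive forecast: average observed daily revenue times the days in a year."""
    return mean(observations) * days_in_year


# One observation point (the peak day) versus every day of the year.
single_point = forecast_annual([daily_revenue[-1]])
full_sample = forecast_annual(daily_revenue)

print(f"forecast from the peak day only: {single_point:,.0f}")
print(f"forecast from all observations:  {full_sample:,.0f}")
print(f"overestimate factor: {single_point / full_sample:.1f}x")
```

The single observation is not wrong in itself. The problem is that far-reaching conclusions are drawn from far too few observation points.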

Output measurement comes in various forms. Measuring productivity by lines of code, number of pull requests, or story points allows for easy manipulation of the data. Manipulation of output can be mitigated by using Pairing Metrics. When designing metrics, it is wise in any case to choose outcome-oriented metrics over output-oriented ones. Measure what you achieve rather than what you do. Measure the increase in conversion rather than the number of features delivered.

Another interesting form of manipulation is measure fixation: the act of measuring an output can itself lead to a higher value of that output. For example, suppose we appraise a street full of homes and every house is awarded a higher value. As a result, the value of an individual home could increase simply because all its neighbors have been valued higher.

Having metrics can also lead to gaming of behavior. For example, a sales department could take it slow in December to prevent an overly steep increase of next year's target. Budget-oriented organizations might recognize the urge to spend the entire budget at the end of the year to prevent a budget cut the next year. A great example of output manipulation can be found in the Cobra Effect.

Still, organizations should use measurements

To reiterate, I don’t think metrics are a bad thing. Please, use them. However, to design a system of metrics and a way of evaluating them, it is important to realize where that system can be manipulated. Dysfunctional effects are part of institutionalizing metrics. The trick is to ensure that these effects do not get in the way of the goal of the organization. Pairing Metrics, for example, can help to prevent unintended side effects of a metric. Measurements are easily gamed, especially when metrics are tied to rewards and performance. One must be careful about tying incentives to a single metric. Rather, use a set of well-balanced metrics to incentivize performance. In the end, the metrics, the incentives, and the evaluation of measurements should all be aligned with the goal of the organization. Organizational Sensing can help to steer the organization.

Literature

Power, M. (2004). Counting, control and calculation: Reflections on measuring and management. Human Relations, 57(6), 765–783.

Van Dooren, W. (2006). Performance measurement in the Flemish public sector: A supply and demand approach.
